Did you know that the FDA had authorized nearly 700 AI-enabled medical devices by early 2024? Clinical decision support tools are revolutionizing healthcare delivery, and the number of AI and machine learning enabled medical devices reached 692 in January 2024. This rapid growth, with annual increases of 39%, 15%, and 14% in 2020, 2021, and 2022 respectively, demonstrates the healthcare industry’s growing reliance on technology for critical decisions.

However, these powerful tools face significant challenges. AI models can lose accuracy as patient populations and data shift over time, a problem known as model drift, which makes consistent outputs difficult to achieve in bedside scenarios. Reliable clinical decision support can be literally life-saving in conditions like sepsis, the third leading cause of death worldwide with over ten million deaths annually. The evidence of benefit is still uneven: approximately 30% of studies focused on diabetes care have demonstrated measurable improvements, including 30% reductions in adverse drug events. Understanding how to build stable, reliable systems is therefore crucial for your practice.

Throughout this article, you’ll discover how evidence-based clinical decision support systems can transform your clinical data workflow. We’ll explore how advanced clinical decision support integrates with your cognitive processes, and how solutions like Holisticare.io use explainable AI to analyze biomarkers and generate personalized recommendations. Whether you’re concerned about AI inconsistency or looking to enhance your diagnostic capabilities, this guide will help you navigate the complex landscape of AI in healthcare.

Understanding the Cognitive Workflow of Clinicians

Clinical diagnosis resembles detective work more than scientific methodology. Before clinical decision support systems can effectively augment medical reasoning, we must first grasp how clinicians process information and reach conclusions, particularly in uncertain scenarios.

How doctors make decisions under uncertainty

Medical decision making (MDM) represents the provider’s cognitive work when seeing a patient and forms the most critical component of evaluation and management services [1]. The diagnostic process follows distinct steps: information gathering, hypothesis generation, hypothesis testing, and reflection [2]. During this process, clinicians navigate uncertain situations where they cannot precisely predict risks or expected outcomes.

Two cognitive systems govern clinical decisions. System 1 is fast, automatic, emotional, and operates subconsciously, while System 2 is slow, effortful, logical, and requires conscious thought [2]. Although System 2 generally yields better results through methodical analysis of clinical data, clinicians under pressure often default to System 1 thinking [2]. This becomes especially problematic when handling complex biomarker analysis or interpreting machine learning outputs.

Notably, uncertainty manifests differently across experience levels. While inexperienced clinicians face uncertainty with new procedures, even seasoned doctors confront ambiguity when guidelines conflict or when patient presentations defy typical patterns [3]. Furthermore, studies suggest many clinicians lack insight into their own decision-making processes, with doctors who describe themselves as “excellent decision-makers” and “free from bias” subsequently scoring poorly in formal tests [4].

Common cognitive biases in diagnosis and treatment

Cognitive errors underpin approximately 75% of mistakes in internal medicine practice [5]. These biases affect clinical reasoning at every step from information gathering to verification. The most prevalent biases include:

- Anchoring bias: Steadfastly sticking to an initial impression while ignoring contradictory evidence [6]
- Premature closure: Jumping to conclusions without considering all possibilities, one of the most common errors [4]
- Confirmation bias: Selectively accepting data that support a desired hypothesis while ignoring contradictory information [5]
- Availability bias: Choosing diagnoses that readily come to mind due to recent or memorable experiences [4]
- Base rate neglect: Ignoring the true prevalence of a disease, so that its actual occurrence rate is overestimated or underestimated [2]

Such biases occur regardless of clinician experience but appear more common in experts [6]. Consequently, any AI clinical decision support system must account for these cognitive tendencies rather than reinforcing them.
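Base rate neglect is easiest to see with Bayes’ theorem. The short sketch below is our own illustration, not taken from the cited sources: it computes the probability of disease after a positive result from an assumed sensitivity, specificity, and pre-test prevalence. The 95%-sensitive, 95%-specific test is hypothetical.

```python
def post_test_probability(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Probability of disease given a positive test result (Bayes' theorem)."""
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical test: 95% sensitive and 95% specific, applied at three base rates.
for prevalence in (0.01, 0.10, 0.50):
    ppv = post_test_probability(prevalence, sensitivity=0.95, specificity=0.95)
    print(f"prevalence {prevalence:.0%}: P(disease | positive) = {ppv:.1%}")
```

With these assumed values, a positive result at 1% prevalence implies only about a 16% probability of disease, while the same result at 50% prevalence implies 95%. Judging the result without the prior is exactly the error base rate neglect describes.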

Why AI-CDS must align with human reasoning

For AI in healthcare to overcome translational barriers, systems must be designed around concrete needs and challenges faced by human decision-makers [6]. Rather than attempting to replace clinical judgment, advanced clinical decision support should augment specific cognitive tasks, boost comprehension, and facilitate critical reasoning [6].

Poorly designed AI tools often deliver irrelevant or confusing information, adding to cognitive burden instead of alleviating it [7]. This proves especially problematic when analyzing complex clinical data from patients with unstable conditions. Effective AI-CDS systems must therefore support clinical reasoning by helping clinicians consider competing hypotheses and test various scenarios [7].

The optimal approach involves tools with ante-hoc interpretability – designed to be understandable from the outset rather than providing post-hoc explanations of black-box decisions [7]. Systems like Holisticare.io embody this philosophy, offering explainable AI that generates personalized diet and exercise recommendations from over 800 biomarkers while making the reasoning process transparent to clinicians.

To gain clinician trust, such systems must blend seamlessly into existing workflows, respecting the team-based nature of medical decision-making that often occurs under time constraints and uncertainty [7]. Only then can machine learning truly enhance rather than disrupt the complex cognitive workflow of clinical reasoning.

Designing AI Clinical Decision Support for Human-AI Collaboration

Effective clinical decision support doesn’t replace human expertise—it enhances it through strategic collaboration. Understanding different models of human-AI partnership is essential for developing systems that deliver consistent, reliable outputs at the bedside.

Assistance vs augmentation vs automation models

Human-AI integration in healthcare follows three distinct approaches. First, automation handles tasks without direct human input, functioning as an additional information source or making prescriptive decisions monitored by clinicians [8]. Second, assistance (human-in-the-loop) supports clinicians with descriptive or predictive modeling, providing insights for review [9]. Third, augmentation (machine-in-the-loop) streamlines tasks that remain primarily human, complementing clinicians’ abilities while presenting multiple choices with their consequences [9].

A Delphi survey among data science experts revealed task-specific consensus on appropriate levels of AI integration [8]:

- Monitoring patient data: 63.6% favored full automation (level 4)
- Documentation: 90.1% supported automation with human assistance (level 3)
- Analyzing medical data: 81.8% preferred AI autonomy with human reliability checks (level 3)
- Medication prescribing and diagnosis: 81.8% advocated for human-led augmentation (level 2)
- Patient interaction: 90.1% agreed it cannot be augmented or automated [8]

This human-first approach creates joint cognitive systems achieving better outcomes than either humans or AI could independently [8].
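One way to make task-specific levels operational is to encode them as an explicit routing policy, so that no AI output is used beyond the level agreed for its task. The sketch below is hypothetical: the task names mirror the survey categories above, but the `AutomationLevel` scale, the `POLICY` table, and the `route` rule are illustrative assumptions, not the survey’s own framework.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    # Hypothetical scale loosely following the survey's levels.
    HUMAN_ONLY = 1             # AI neither augments nor automates the task
    AUGMENTATION = 2           # human leads; AI offers options
    SUPERVISED_AUTOMATION = 3  # AI acts; a human checks reliability
    FULL_AUTOMATION = 4        # AI acts without routine review


# Hypothetical task-to-level policy mirroring the consensus described above.
POLICY = {
    "monitoring": AutomationLevel.FULL_AUTOMATION,
    "documentation": AutomationLevel.SUPERVISED_AUTOMATION,
    "data_analysis": AutomationLevel.SUPERVISED_AUTOMATION,
    "prescribing": AutomationLevel.AUGMENTATION,
    "diagnosis": AutomationLevel.AUGMENTATION,
    "patient_interaction": AutomationLevel.HUMAN_ONLY,
}


def route(task: str, ai_output: str) -> str:
    """Decide how an AI output for a given task may be used."""
    # Unknown tasks fall back to the most conservative level.
    level = POLICY.get(task, AutomationLevel.HUMAN_ONLY)
    if level == AutomationLevel.HUMAN_ONLY:
        return "suppress: task reserved for clinicians"
    if level == AutomationLevel.AUGMENTATION:
        return f"suggest to clinician: {ai_output}"
    if level == AutomationLevel.SUPERVISED_AUTOMATION:
        return f"apply pending clinician review: {ai_output}"
    return f"apply automatically: {ai_output}"


print(route("prescribing", "consider dose reduction"))
```

Here `route("prescribing", ...)` only produces a suggestion for the clinician. Defaulting unlisted tasks to the human-only level means a new use case has to be deliberately added to the policy before any automation applies to it.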

Machine-in-the-loop design for shared decision-making

Unlike technology-driven initiatives, machine-in-the-loop designs prioritize human judgment while enhancing it with computational power. This model recognizes that even with advanced clinical data analysis, certain tasks require the context, empathy, and ethical reasoning only humans provide [3].

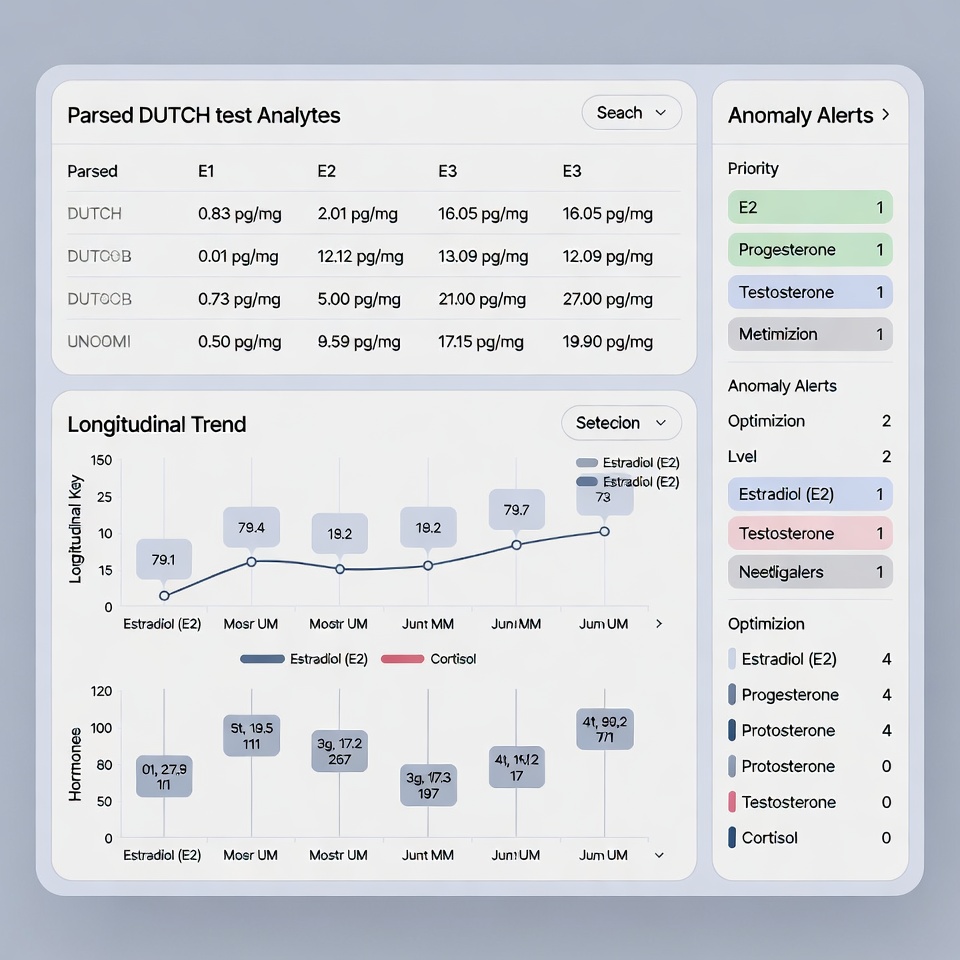

Shared decision-making becomes particularly vital when analyzing biomarkers and generating treatment recommendations. For instance, Holisticare.io applies this approach in its explainable AI, which processes 800+ biomarkers to create personalized diet and exercise plans. The system presents clinicians with multiple options and their rationales, maintaining human oversight while reducing the cognitive load of processing large volumes of data.

Research demonstrates that clinicians want to “stay in the driver’s seat” with AI assistance [8], especially for complex biomarker analysis where machine learning can identify patterns humans might miss.

Supporting mental simulation and belief updating

Successful evidence-based clinical decision support must align with how clinicians form and update beliefs. Clinical decisions involve mental simulation—running through potential scenarios and outcomes before action [10]. Advanced clinical decision support systems can facilitate this process by presenting alternative treatment paths with anticipated outcomes.
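To make mental simulation concrete, the sketch below scores hypothetical treatment paths by expected utility over their possible outcomes and returns every path ranked, not just the winner, so the clinician can compare alternatives. Expected-utility scoring is our chosen illustration; all probabilities and utilities are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Branch:
    probability: float  # chance of this outcome if the path is chosen
    utility: float      # hypothetical value of the outcome on a 0-1 scale
    label: str


def expected_utility(branches: list[Branch]) -> float:
    total_p = sum(b.probability for b in branches)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("branch probabilities must sum to 1")
    return sum(b.probability * b.utility for b in branches)


def simulate(paths: dict[str, list[Branch]]) -> list[tuple[str, float]]:
    """Score every candidate path and return all of them, best first."""
    scored = [(name, expected_utility(branches)) for name, branches in paths.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical alternatives with placeholder numbers.
paths = {
    "treat now": [Branch(0.7, 0.9, "improves"), Branch(0.3, 0.4, "adverse effect")],
    "watchful waiting": [Branch(0.5, 0.8, "resolves"), Branch(0.5, 0.3, "worsens")],
}
for name, eu in simulate(paths):
    print(f"{name}: expected utility {eu:.2f}")
```

Returning the full ranked list, with the branches that produced each score, keeps the runner-up visible, which is the point of supporting simulation rather than issuing a single verdict.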

Moreover, AI can support belief updating—the revision of existing hypotheses when new clinical data emerges [10]. This proves crucial in unstable conditions where patient status changes rapidly. By analyzing temporal trajectories rather than point-in-time predictions, AI can provide more consistent outputs [11].
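Belief updating is commonly formalized as Bayes’ rule in odds form: posterior odds equal prior odds multiplied by the likelihood ratio of the new finding. The sketch below applies it to a sequence of findings and returns the belief after each one, the kind of running trajectory a CDS display could show. The likelihood ratios are hypothetical, and chaining them assumes the findings are conditionally independent, which real clinical findings often are not.

```python
def to_odds(p: float) -> float:
    return p / (1.0 - p)


def to_probability(odds: float) -> float:
    return odds / (1.0 + odds)


def update_belief(prior: float, likelihood_ratios: list[float]) -> list[float]:
    """Return the probability of the hypothesis after each successive finding.

    Assumes the findings are conditionally independent given the diagnosis.
    """
    odds = to_odds(prior)
    trajectory = []
    for lr in likelihood_ratios:
        odds *= lr  # Bayes' rule in odds form
        trajectory.append(to_probability(odds))
    return trajectory


# Hypothetical: prior 20%, then a supportive finding (LR 4) and a reassuring one (LR 0.5).
print([round(p, 3) for p in update_belief(0.20, [4.0, 0.5])])
```

In this example the belief moves from 20% to 50% after the supportive finding and back to about 33% after the reassuring one.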

However, empirical research shows that explanations can increase reliance on AI recommendations regardless of their quality: in one study they improved decision accuracy by 4.3 percentage points when the AI was correct but decreased it by 4.6 points when it was incorrect [11]. This highlights why transparency must be carefully designed to support rather than override clinical judgment.
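A back-of-the-envelope reading of these two numbers: if explanations add 4.3 points when the AI is right and subtract 4.6 points when it is wrong, the expected net effect depends on how often the AI is right. The sketch assumes the two effects combine linearly with AI accuracy, which the cited study does not claim; it only shows why the same explanation design can help or hurt.

```python
GAIN_WHEN_CORRECT = 4.3  # percentage points, from the study cited above
LOSS_WHEN_WRONG = 4.6    # percentage points, from the study cited above


def net_accuracy_change(ai_accuracy: float) -> float:
    """Expected change in clinician accuracy (percentage points) from showing explanations,
    assuming the gain and loss simply average over correct and incorrect AI advice."""
    return GAIN_WHEN_CORRECT * ai_accuracy - LOSS_WHEN_WRONG * (1.0 - ai_accuracy)


# Accuracy at which the expected gain and loss cancel out.
break_even = LOSS_WHEN_WRONG / (GAIN_WHEN_CORRECT + LOSS_WHEN_WRONG)
print(f"break-even AI accuracy: {break_even:.3f}")
for acc in (0.5, 0.8, 0.95):
    print(f"AI accuracy {acc:.0%}: net change {net_accuracy_change(acc):+.2f} points")
```

Under that assumption the break-even accuracy is 4.6 / (4.3 + 4.6), about 0.517, so an AI that is right only about half the time would make explained recommendations net harmful.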

Stabilizing AI Outputs Through Context-Aware Modeling

Consistency remains the cornerstone of any reliable clinical decision support system. Unlike traditional static algorithms, context-aware AI models recognize that medical conditions evolve over time, requiring systems that adapt to changing patient circumstances.

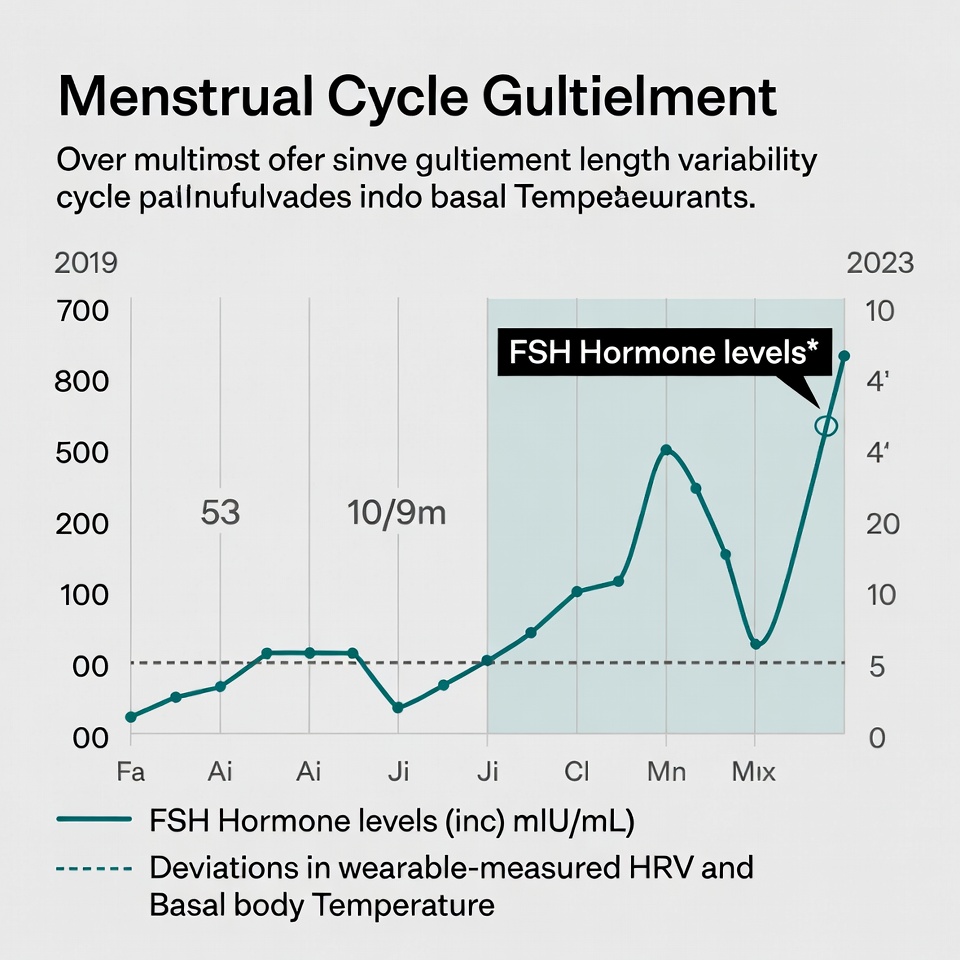

Temporal modeling of patient trajectories

Patient trajectories represent time-resolved sequences of health events across multiple timepoints—hospitalizations, treatment interventions, physiological measurements, and biomarker fluctuations [12]. These longitudinal patterns offer significantly more insight than isolated snapshots. Advanced clinical decision support systems now utilize these trajectories to assess the heterogeneity of disease courses, providing more stable and personalized recommendations.

Traditional AI approaches often fall short when analyzing sequential medical data. Studies show that carefully engineered sequential models outperform standard deep learning in both predictive accuracy and interpretability [12]. For instance, systems tracking biomarker changes over time can identify subtle patterns indicating disease progression before conventional analysis detects problems.
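The difference between a trajectory and a snapshot can be shown with a least-squares trend. The sketch below fits a slope to a hypothetical biomarker series with irregular sampling dates. The cutoff and trend threshold are placeholders, and a linear slope is a deliberately modest stand-in for the sequential models discussed in this section.

```python
from statistics import mean


def trend_per_day(times_days: list[float], values: list[float]) -> float:
    """Ordinary least-squares slope of a biomarker against time.

    Works with irregular sampling because each point keeps its own timestamp.
    """
    if len(times_days) != len(values) or len(values) < 2:
        raise ValueError("need at least two paired measurements")
    t_bar, v_bar = mean(times_days), mean(values)
    sxx = sum((t - t_bar) ** 2 for t in times_days)
    if sxx == 0:
        raise ValueError("timestamps must not all be identical")
    sxy = sum((t - t_bar) * (v - v_bar) for t, v in zip(times_days, values))
    return sxy / sxx


# Hypothetical series (days since first draw, biomarker value).
times = [0, 30, 60, 75, 90]
values = [0.8, 0.9, 1.0, 1.1, 1.2]
CUTOFF = 1.3           # placeholder snapshot cutoff
TREND_LIMIT = 0.003    # placeholder slope threshold, units per day

slope = trend_per_day(times, values)
snapshot_flag = values[-1] > CUTOFF
trend_flag = slope > TREND_LIMIT
print(snapshot_flag, trend_flag, round(slope, 4))
```

Here no single value crosses the cutoff, so a snapshot rule stays silent, while the positive slope (about 0.0043 units per day) flags the upward trajectory.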

Modern AI clinical decision support employs specialized neural networks tailored for sequential data structures:

- Long short-term memory networks that process physiological measurements while learning lower-dimensional representations
- Gated recurrent units with parsimonious structures that reduce overfitting risk with smaller datasets
- Transformer networks with attention layers that differentially weigh parts of patient trajectories while optimizing outcome predictions [12]

Avoiding point-in-time predictions in unstable conditions

Point-in-time predictions present significant reliability challenges in clinical settings. Research reveals that machine learning models whose training depends on stochastic processes, such as random initialization, suffer from reproducibility issues: changing the random seed alone can change both predictive performance and feature importance [13]. Such fluctuations can undermine clinician trust in AI recommendations.
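The seed sensitivity described above is easy to reproduce. The sketch below fits the same logistic regression (plain SGD, written out so it needs no libraries) under five seeds, reports how much one patient’s predicted risk moves, and averages the runs into a seed ensemble. Seed ensembling is a common mitigation we chose for illustration, not necessarily the approach of the cited study, and the data are synthetic.

```python
import math
import random


def train_logistic(xs, ys, seed, epochs=50, lr=0.1):
    """Fit logistic regression by plain SGD; the seed controls both the initial
    weights and the order in which samples are visited."""
    rng = random.Random(seed)
    weights = [rng.gauss(0.0, 1.0) for _ in range(len(xs[0]))]
    bias = rng.gauss(0.0, 1.0)
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            z = bias + sum(w * x for w, x in zip(weights, xs[i]))
            error = 1.0 / (1.0 + math.exp(-z)) - ys[i]  # gradient of log loss w.r.t. z
            weights = [w - lr * error * x for w, x in zip(weights, xs[i])]
            bias -= lr * error
    return weights, bias


def predict(model, x):
    weights, bias = model
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


# Synthetic, deliberately small dataset with two standardized features.
xs = [[-1.0, 0.5], [-0.5, -1.0], [0.0, 0.2], [0.4, -0.3], [0.8, 1.0], [1.2, 0.1]]
ys = [0, 0, 0, 1, 1, 1]
patient = [0.2, 0.0]

models = [train_logistic(xs, ys, seed) for seed in range(5)]
risks = [predict(m, patient) for m in models]
print("per-seed risk:", [round(r, 3) for r in risks])
print("spread across seeds:", round(max(risks) - min(risks), 3))
print("seed-ensemble risk:", round(sum(risks) / len(risks), 3))
```

Reporting the spread alongside the ensemble estimate tells clinicians how much of a risk score reflects training noise rather than the patient.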

Crucially, the time intervals between health measurements often carry clinical information in their own right. Shorter intervals between recordings typically indicate deterioration in patient status, which AI solutions can use to predict further decline [12]. Context-aware modeling that accounts for such signals can help keep outputs stable in unstable conditions, where patient status changes rapidly.
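Measurement frequency can be turned into a feature directly. The sketch below compares the average gap between recent measurements with the average gap earlier in the record. The window size and timestamps are hypothetical, and a falling ratio can also reflect protocol or staffing changes rather than deterioration.

```python
from datetime import datetime


def interval_hours(timestamps: list[datetime]) -> list[float]:
    """Hours between consecutive measurements (timestamps must be sorted)."""
    return [(b - a).total_seconds() / 3600.0 for a, b in zip(timestamps, timestamps[1:])]


def sampling_acceleration(timestamps: list[datetime], recent: int = 3) -> float:
    """Ratio of the mean recent gap to the mean earlier gap.

    Values well below 1 mean measurements are being taken more often.
    `recent` is a hypothetical window size (number of most recent gaps).
    """
    gaps = interval_hours(timestamps)
    if len(gaps) <= recent:
        raise ValueError("not enough history to compare windows")
    earlier, latest = gaps[:-recent], gaps[-recent:]
    return (sum(latest) / len(latest)) / (sum(earlier) / len(earlier))


# Hypothetical vital-sign timestamps: every 8 hours, then every 2 hours.
times = [datetime(2024, 1, 1, h) for h in (0, 8, 16)] + [
    datetime(2024, 1, 2, h) for h in (0, 2, 4, 6)
]
print(round(sampling_acceleration(times), 2))
```

For this example the gaps shrink from 8 hours to 2 hours, so the ratio is 0.25.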

Using patient history to reduce output variability

Patient history remains fundamental to accurate clinical decisions, even in AI-assisted diagnostics. Studies report a concordance rate of 76.6% between diagnoses made solely on patient history and those made using comprehensive information [14]. When physical findings and test data were added to the history, diagnostic accuracy increased to 93.3%, an improvement of 16.7 percentage points [14].
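The 16.7-point figure is an absolute difference. Relative to the history-only baseline, the same gap is larger:

$$
\Delta_{\text{abs}} = 93.3\% - 76.6\% = 16.7 \text{ percentage points}, \qquad
\Delta_{\text{rel}} = \frac{93.3 - 76.6}{76.6} \approx 0.218 = 21.8\%
$$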

Context-aware applications draw on various elements to stabilize outputs, with patient history data (used in 8 of 23 studies) and user location (8 of 25 studies) being the most prevalent contexts [15]. For example, these systems can use patient-specific medication history to judge prescription safety, generating alerts based on individual lab values and clinical parameters [15].
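A context-aware alert of this kind can be sketched as a rule that consults the patient’s own history instead of firing on the drug alone. Everything here is hypothetical: `drug_x`, the `egfr` cutoff of 30.0, and the 90-day freshness window are placeholders chosen for illustration, not prescribing guidance.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class LabResult:
    name: str
    value: float
    taken_on: date


# Hypothetical rule: prescribing "drug_x" requires a recent "egfr" result
# at or above a placeholder cutoff.
RULE_DRUG = "drug_x"
RULE_LAB = "egfr"
RULE_MIN_VALUE = 30.0
RULE_MAX_AGE = timedelta(days=90)


def check_prescription(drug: str, history: list[LabResult], today: date) -> Optional[str]:
    """Return an alert message, or None if the rule raises no concern."""
    if drug != RULE_DRUG:
        return None
    relevant = [r for r in history if r.name == RULE_LAB]
    if not relevant:
        return f"no {RULE_LAB} on record: order one before prescribing {drug}"
    latest = max(relevant, key=lambda r: r.taken_on)  # use the most recent result only
    if today - latest.taken_on > RULE_MAX_AGE:
        return f"latest {RULE_LAB} from {latest.taken_on} is older than {RULE_MAX_AGE.days} days: repeat before prescribing"
    if latest.value < RULE_MIN_VALUE:
        return f"{RULE_LAB} {latest.value} below {RULE_MIN_VALUE}: review {drug}"
    return None


history = [LabResult("egfr", 55.0, date(2024, 1, 10)), LabResult("egfr", 28.0, date(2024, 3, 1))]
print(check_prescription("drug_x", history, today=date(2024, 3, 15)))
```

Basing the alert on the most recent result, and checking that it is recent enough, is what distinguishes this from a static alert that fires for every prescription of the drug.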

Systems like Holisticare.io exemplify this approach with their explainable AI platform. By analyzing 800+ biomarkers within their historical context rather than as isolated measurements, the system generates stable, personalized diet and exercise recommendations. Similarly, FDB has described using the Model Context Protocol (MCP) to deliver adaptive, context-aware guidance within existing clinical workflows, shifting from static alerts to patient-specific insights that reduce cognitive load [16].

Ante-hoc interpretability vs post-hoc explainability

Transparency forms the backbone of clinical AI adoption. Without clear explanations for how systems process clinical data and reach conclusions, even the most accurate tools face implementation barriers at the bedside.


Two distinct approaches exist for making AI in healthcare understandable to clinicians. Post-hoc explainability methods such as SHAP, LIME, and Grad-CAM attempt to explain already-built black-box models after decisions are made [17]. In effect, these methods explain black-box models using black-box methods, so the explanations might not faithfully represent the model’s actual reasoning [17].
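To see what a post-hoc attribution actually computes, the sketch below calculates exact Shapley values for a toy model by brute force, replacing “absent” features with a baseline value. This is the quantity that tools such as SHAP approximate, not the SHAP library itself; the model, input, and all-zeros baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attributions by enumerating every feature coalition.

    Features outside a coalition take their baseline value. Cost grows as 2**n,
    so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def black_box(v):
    # Stand-in for an opaque model: one additive term and one interaction.
    return 2.0 * v[0] + v[1] * v[2]


print(shapley_values(black_box, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

The additive term goes entirely to feature 0 (2), while the interaction `v[1] * v[2]` is split equally between features 1 and 2 (3 each). The attributions sum to f(x) minus f(baseline), but they change whenever the baseline changes, one reason a post-hoc explanation can diverge from how the model actually reasons.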

Conversely, ante-hoc (intrinsically interpretable) models such as ProtoPNet and Concept Bottleneck Models are designed from the ground up to be transparent [17]. Although these models sometimes perform slightly worse than their black-box counterparts, they offer genuine explanations that reflect the system’s actual decision logic [17]. The distinction between inherently explainable models and after-the-fact companion models remains poorly addressed in policy frameworks despite its critical importance [6].
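By contrast, an ante-hoc design can be sketched as a concept bottleneck: raw inputs are first mapped to named, clinician-readable concepts, and the final score is computed from those concepts alone, so the explanation is the computation itself. The concepts, thresholds, and weights below are hypothetical placeholders; in a real Concept Bottleneck Model both layers are learned from data.

```python
import math

# Hypothetical concept layer: each concept is a named, readable quantity computed
# from raw inputs. Real concept bottleneck models learn these mappings from labeled concepts.
CONCEPTS = {
    "elevated_glucose": lambda raw: raw["fasting_glucose"] > 100,
    "high_waist_ratio": lambda raw: raw["waist_to_height"] > 0.5,
    "low_activity": lambda raw: raw["weekly_active_minutes"] < 150,
}

# Hypothetical weights: the final score sees ONLY the concepts, never the raw inputs.
WEIGHTS = {"elevated_glucose": 1.2, "high_waist_ratio": 0.8, "low_activity": 0.6}
BIAS = -1.5


def predict_with_explanation(raw: dict) -> tuple[float, dict]:
    concepts = {name: float(fn(raw)) for name, fn in CONCEPTS.items()}
    contributions = {name: WEIGHTS[name] * c for name, c in concepts.items()}
    z = BIAS + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-z))  # logistic link on the concept score
    return risk, contributions


patient = {"fasting_glucose": 110, "waist_to_height": 0.45, "weekly_active_minutes": 60}
risk, contributions = predict_with_explanation(patient)
print(round(risk, 3), contributions)
```

Because the prediction passes only through the concepts, a clinician who disagrees with one of them (say, the activity estimate) can correct that concept and see exactly how the score changes.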

HolistiCare's approach to explainable diet/exercise plans

Holisticare.io exemplifies the ante-hoc approach through its AI platform for biomarker analysis. Our system performs routine analysis of 800+ biomarkers while preserving clinician control at every stage [7]. Unlike traditional plans addressing single health aspects, Holisticare combines multiple data types into holistic, clinician-reviewable recommendations spanning diet, exercise, sleep, and mental health domains [7].

This approach acknowledges that clinicians need both the what and the why behind AI recommendations to build trust [18]. By allowing professionals to enhance their practice while maintaining quality standards, the platform facilitates proactive healthcare delivery [19].

Evaluating Real-World Impact of AI-CDS Systems

Rigorous evaluation remains vital for any clinical decision support system that processes biomarker data. Beyond laboratory validation, real-world assessment provides crucial insights into how AI tools actually perform in clinical settings.

Measuring decision consistency and error reduction

A meta-analysis of 87 studies found that implementing clinical decision support systems reduced medication errors by 53% overall [1]. Effectiveness varied markedly across specialties, however, from a 65.3% error reduction in geriatrics to 39.8% in cardiology [1]. This variation underscores the need for specialty-specific evaluation metrics.

Healthcare organizations often monitor AI performance using traditional metrics like area under the receiver operating characteristic curve (AUROC), sensitivity, specificity, and predictive values [23]. Nonetheless, these conventional measures may prove insufficient for continuously learning systems where inputs and outputs evolve over time.
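For reference, the conventional metrics named above take only a few lines to compute. The sketch computes AUROC as the probability that a randomly chosen positive case outranks a randomly chosen negative one (the Mann-Whitney formulation) and the threshold-dependent metrics from a confusion matrix. The scores, labels, and 0.5 threshold are made up; for a continuously learning system these would need recomputing on rolling windows rather than once.

```python
def auroc(scores, labels):
    """Probability that a random positive scores above a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def threshold_metrics(scores, labels, threshold):
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)
    tn = sum(1 for s, y in pairs if s < threshold and y == 0)

    def ratio(num, den):
        return num / den if den else float("nan")  # undefined when the denominator is empty

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0]
print(round(auroc(scores, labels), 3), threshold_metrics(scores, labels, 0.5))
```

The pairwise AUROC is quadratic in the number of cases, which is fine for a sketch; rank-based implementations scale better.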

In unstable clinical conditions, measuring consistency becomes particularly challenging because model performance naturally changes in response to the environment [23]. Two feedback effects complicate this further. First, AI systems can create self-fulfilling prophecies when predictions influence treatment decisions in ways that increase the likelihood of the predicted outcome [5]. Second, systems may become victims of their own success when interventions prevent the predicted adverse events, making the model appear less accurate over time [5].
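The “victim of its own success” effect is simple arithmetic. Suppose, hypothetically, that 40% of flagged patients would go on to have the event and that the intervention prevents half of those events in patients who receive it. The model never changes, yet its apparent positive predictive value falls as more flagged patients are treated:

```python
def observed_ppv(true_event_rate_flagged: float, intervention_effect: float,
                 treated_fraction: float) -> float:
    """Event rate observed among flagged patients when some of them receive
    an intervention that prevents a fraction of their events."""
    untreated = (1.0 - treated_fraction) * true_event_rate_flagged
    treated = treated_fraction * true_event_rate_flagged * (1.0 - intervention_effect)
    return untreated + treated


# Hypothetical: 40% true event rate among flagged patients, intervention halves it.
for fraction in (0.0, 0.5, 1.0):
    print(f"{fraction:.0%} treated: apparent PPV {observed_ppv(0.4, 0.5, fraction):.2f}")
```

A monitoring dashboard that ignores who was treated would read the drop from 0.40 to 0.20 as model decay, which is why evaluation has to account for the interventions the model itself triggers.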

Feedback loops and continuous learning in clinical settings

Continuous learning AI adapts to new input data, improving predictions and classifications over time [4]. This adaptive capability offers substantial benefits but introduces unique evaluation challenges, including generalizability issues and potentially unpredictable real-world performance [4].

The learning health system framework provides a structured approach for model refinement through integrating local clinical data [5]. Under this model, prediction systems undergo constant monitoring, validation, and improvement after implementation [5]. Presently, several methods facilitate this ongoing assessment:

- Pre-clinical silent trials running in the background without influencing care
- Prospective quality improvement-focused validation within EHRs
- Rapid cycle comparative effectiveness trials through automated A/B testing [24]
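One way to watch for model drift during a silent trial or after go-live is to compare the distribution of an input, or of the model’s scores, in recent data against the reference data the model was validated on. The sketch uses the population stability index (PSI), a drift statistic we chose for illustration; it is not named in the sources above. Bin cut points and samples are hypothetical.

```python
import bisect
import math


def bin_proportions(sample, cut_points, eps=1e-6):
    """Share of `sample` in each bin; bins are (-inf, c0), [c0, c1), ..., [ck, inf)."""
    counts = [0] * (len(cut_points) + 1)
    for value in sample:
        counts[bisect.bisect_right(cut_points, value)] += 1
    # eps floors empty bins so the log term below stays finite
    return [max(c / len(sample), eps) for c in counts]


def psi(reference, recent, cut_points):
    """Population stability index: sum over bins of (a - e) * ln(a / e)."""
    e = bin_proportions(reference, cut_points)
    a = bin_proportions(recent, cut_points)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Hypothetical validation-period and post-deployment values of one input.
reference = [1, 2, 3, 4, 5, 6, 7, 8]
recent = [5, 6, 7, 8, 9, 9, 10, 11]
cut_points = [3, 6]
print(round(psi(reference, reference, cut_points), 3), round(psi(reference, recent, cut_points), 3))
```

A frequently quoted rule of thumb from credit-risk modeling treats a PSI above about 0.25 as a major shift; any threshold for clinical use would need local validation.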

Crucially, clinician involvement significantly enhances system performance. Studies featuring active clinician participation in design and customization showed greater effectiveness in reducing errors [1]. Similarly, provider feedback loops act as built-in review stages where specialist input teaches AI models about care nuances, improving accuracy over time [25].

Equity and fairness in AI-driven recommendations

Equity concerns—defined by WHO as “the absence of systematic disparities between groups with different levels of underlying social advantage” [26]—remain paramount when evaluating AI clinical decision support. Without careful design, these systems risk perpetuating or amplifying existing biases embedded in training data [4].

To mitigate bias risks, evaluation must include fairness metrics across diverse populations [23]. Appropriate measures include monitoring sensitivity, specificity, and positive predictive values among different demographic groups [23]. Equally important, multistakeholder teams should evaluate models at three key stages: development, health system integration, and deployment [27].
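A per-group breakdown of these metrics can be sketched as follows. The groups and counts are hypothetical, and reporting the largest between-group gap is one simple summary among many possible fairness measures.

```python
import math
from collections import defaultdict


def metrics_by_group(records):
    """Sensitivity, specificity and PPV per group.

    `records` is an iterable of (group, predicted_positive, actually_positive) tuples.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for group, predicted, actual in records:
        key = ("tp" if actual else "fp") if predicted else ("fn" if actual else "tn")
        counts[group][key] += 1

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        group: {
            "sensitivity": ratio(c["tp"], c["tp"] + c["fn"]),
            "specificity": ratio(c["tn"], c["tn"] + c["fp"]),
            "ppv": ratio(c["tp"], c["tp"] + c["fp"]),
        }
        for group, c in counts.items()
    }


def max_gap(report, metric):
    """Largest difference in a metric between any two groups (NaN values skipped)."""
    values = [m[metric] for m in report.values() if not math.isnan(m[metric])]
    return max(values) - min(values) if values else float("nan")


# Hypothetical predictions for two demographic groups.
records = (
    [("A", True, True)] * 3 + [("A", False, True)] + [("A", False, False)] * 4
    + [("B", True, True)] + [("B", False, True)] * 3 + [("B", False, False)] * 3
    + [("B", True, False)]
)
report = metrics_by_group(records)
print(report, max_gap(report, "sensitivity"))
```

In this example sensitivity is 0.75 in group A and 0.25 in group B, a gap of 0.5 that the pooled sensitivity of 0.5 would hide.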

Holisticare.io addresses these challenges through its explainable AI platform that analyzes 800+ biomarkers while maintaining equitable performance across diverse populations. By providing transparent reasoning behind each recommendation, the platform lets clinicians verify that biomarker analysis remains consistent and fair for all patient groups, ultimately building trust in machine learning outputs applied to clinical data.

Conclusion

Building stable clinical decision support systems requires understanding how clinicians process information and make decisions under uncertainty. Throughout this article, you’ve learned how AI in healthcare must align with human reasoning rather than replace it. Effective systems assist your cognitive workflow by supporting mental simulation and belief updating while presenting multiple treatment options.

Patient histories undoubtedly serve as the foundation for reliable clinical data analysis. Context-aware modeling that examines temporal trajectories instead of isolated snapshots delivers more consistent AI outputs at the bedside. This approach proves especially valuable when monitoring biomarker changes over time. A biomarker, essentially any measurable indicator of biological state or condition, provides crucial insights when analyzed within its historical context rather than as a standalone value.

Explainability stands as another cornerstone for building clinician trust. Systems designed with ante-hoc interpretability allow you to understand both what the recommendation is and why it’s being made. Consequently, this transparency reduces alert fatigue while ensuring you remain the ultimate decision-maker.

Holisticare.io exemplifies these principles through its approach to biomarker analysis. Their platform examines over 800 biomarkers using explainable AI to generate personalized diet and exercise recommendations. Because the system provides transparent reasoning behind each suggestion, you can verify that machine learning outputs remain consistent and fair across diverse patient populations.

Though AI clinical decision support tools continue to evolve rapidly, their success ultimately depends on how well they complement your expertise rather than attempt to replace it. Machine-in-the-loop designs that prioritize human judgment while enhancing it with computational power offer the most promising path forward. As these systems become increasingly integrated into clinical workflows, your ability to understand and effectively use them will transform how you process clinical data and deliver personalized care.

Therefore, the future of AI in healthcare lies not in autonomous algorithms but in thoughtful human-AI collaboration—where technology enhances your abilities while you maintain oversight of the healing relationship that remains at the heart of medicine.

Key Takeaways

Building reliable AI clinical decision support requires understanding how clinicians think and designing systems that enhance rather than replace human judgment.

• Design for human-AI collaboration: Use machine-in-the-loop approaches that keep clinicians in control while leveraging AI’s computational power for complex biomarker analysis.

• Prioritize temporal context over snapshots: Analyze patient trajectories and historical data rather than point-in-time predictions to achieve more stable, consistent AI outputs.

• Build transparency from the ground up: Choose ante-hoc interpretable models over black-box systems with post-hoc explanations to establish genuine clinician trust.

• Measure real-world impact continuously: Implement feedback loops and monitor decision consistency, error reduction, and equity across diverse patient populations.

• Address cognitive biases systematically: Design AI systems that support clinical reasoning by helping clinicians consider multiple hypotheses rather than reinforcing common biases like anchoring or premature closure.

The most successful clinical AI systems, like Holisticare.io’s explainable biomarker analysis platform, combine transparent reasoning with comprehensive data processing to deliver personalized recommendations that clinicians can understand, trust, and act upon confidently.

FAQs

Q1. How does AI-powered clinical decision support differ from traditional methods?
AI-powered clinical decision support systems analyze vast amounts of data, including patient histories and biomarkers, to provide more personalized and context-aware recommendations. Unlike traditional methods, these systems can identify subtle patterns and adapt to changing patient conditions over time.

Q2. What is the importance of explainability in AI clinical decision support systems?
Explainability is crucial for building trust and adoption among clinicians. It allows healthcare professionals to understand the reasoning behind AI recommendations, reducing alert fatigue and ensuring that clinicians remain the ultimate decision-makers in patient care.

Q3. How can AI clinical decision support systems reduce cognitive biases in medical decision-making?
By presenting multiple treatment options and their potential outcomes, AI systems can help clinicians consider various hypotheses and avoid common biases like anchoring or premature closure. This supports a more thorough and objective decision-making process.

Q4. What role does patient history play in stabilizing AI outputs for clinical decision support?
Patient history is fundamental in providing context for AI analysis. By incorporating historical data, AI systems can generate more stable and personalized recommendations, especially when analyzing biomarkers and predicting disease progression.

Q5. How are AI clinical decision support systems evaluated for real-world impact?
Evaluation includes measuring decision consistency, error reduction, and equity across diverse patient populations. Continuous learning through feedback loops and clinician involvement is crucial for improving system performance over time.

References

[1] – https://journals.lww.com/qaij/fulltext/2025/01000/effectiveness_of_clinical_decision_support_systems.4.aspx

[2] – https://pmc.ncbi.nlm.nih.gov/articles/PMC9073821/

[3] – https://www.doctronic.ai/blog/augmented-intelligence-vs-ai-in-healthcare-explained/

[4] – https://www.ncbi.nlm.nih.gov/books/NBK605105

[5] – https://www.thelancet.com/journals/landig/article/PIIS2589-7500(25)00062-7/fulltext?rss=yes

[6] – https://www.cigionline.org/documents/2696/no.296.pdf

[7] – https://holisticare.io/

[8] – https://pmc.ncbi.nlm.nih.gov/articles/PMC11301121/

[9] – https://www.nature.com/articles/s41746-025-01725-9

[10] – https://www.sciencedirect.com/science/article/pii/S0149763425001538

[11] – https://www.chapman.edu/research/institutes-and-centers/economic-science-institute/_files/ifree-papers-and-photos/ai-health-belief.pdf

[12] – https://pmc.ncbi.nlm.nih.gov/articles/PMC8686456/

[13] – https://www.sciencedirect.com/science/article/pii/S0169260725003165

[14] – https://pmc.ncbi.nlm.nih.gov/articles/PMC11024399/

[15] – https://pmc.ncbi.nlm.nih.gov/articles/PMC10379857/

[16] – https://www.fdbhealth.com/about-us/media-coverage/2025-10-15-ai-advances-clinical-decision-support-toward-smarter-context-aware-care

[17] – https://xaiworldconference.com/2024/intrinsically-interpretable-explainable-ai/

[18] – https://www.merative.com/blog/ai-in-clinical-decision-support

[19] – https://holisticare.io/features/

[20] – https://synapse-medicine.com/blog/blogpost/fix-alert-fatigue-healthcare

[21] – https://psnet.ahrq.gov/primer/alert-fatigue

[22] – https://premierinc.com/newsroom/blog/reducing-alert-fatigue-in-healthcare

[23] – https://pmc.ncbi.nlm.nih.gov/articles/PMC11630661/

[24] – https://www.nature.com/articles/s44401-025-00029-0

[25] – https://medcitynews.com/2024/04/provider-feedback-loop-the-missing-link-in-ai-development-use-and-adoption/

[26] – https://www.cdc.gov/pcd/issues/2024/24_0245.htm

[27] – https://jamanetwork.com/journals/jama-health-forum/fullarticle/2829644

Disclaimer

The information in this article is provided by HolistiCare for general informational purposes only and is not intended to be a substitute for professional medical advice, diagnosis, or treatment. HolistiCare does not warrant or guarantee the accuracy, completeness, or usefulness of any information contained in this article. Reliance on any information provided here is solely at your own risk.

This content does not create a doctor-patient relationship. Clinical decisions should be made by qualified healthcare professionals using clinical judgment and all available patient information. If you have a medical concern, contact your healthcare provider promptly.

HolistiCare may reference biomarker roles, study examples, products, or tools. Mention of specific tests, biomarkers, therapies, or vendors is for illustrative purposes only and does not imply endorsement. HolistiCare is not responsible for the content of third party sites linked from this article, and inclusion of links does not represent an endorsement of those sites.

Use of HolistiCare software, services, or outputs should be in accordance with applicable laws, regulations, and clinical standards. Where required by law or regulation, clinical use of biomarker information should rely on validated laboratory results and regulatory approvals. HolistiCare disclaims all liability for any loss or damage that may arise from reliance on the information contained in this article.

If you are a patient, please consult your healthcare provider for advice tailored to your clinical situation. If you are a clinician considering HolistiCare for clinical use, contact our team for product specifications, regulatory status, and clinical validation documentation.